Palestine, Corbyn and Trump: Can ChatGPT truly be unbiased?

You’ve probably noticed an uptick in recent weeks in people sharing snippets on social media from ChatGPT, an AI tool capable of producing swathes of cleanly written text that could easily pass as the work of a human.

Putting aside hyperbolic claims by journalists that their jobs will be made redundant, the tool has clear implications for copywriters, creatives and even teachers marking essays.

The AI-powered chatbot was released by OpenAI, whose notable founders include Sam Altman, former president of tech accelerator Y Combinator, and Elon Musk.

With a stated goal of creating “highly autonomous systems that outperform humans at most economically valuable work”, the research lab has the backing of tech giants, such as Microsoft, which poured $1bn into the company in 2019.

ChatGPT is based on a model from OpenAI’s GPT-3.5 series of language models. In short, it can provide human-like responses to a question, producing anything from sonnets to musings on world politics.

What sets the tool apart from similar tools is that it draws on pre-existing data rather than on data it acquires the more it is used. Human fine-tuning is also involved, to ensure that answers are relevant to what is being asked.

ChatGPT also remembers previous responses within the same conversation, allowing it to add extra context and detail, much as two people would delve deeper into a topic or theme over the course of a conversation.

The implications are huge, especially when it comes to the future of search engines.

Someone using Google will often have to trawl through half a dozen results before landing on something authoritative, reliable and relevant. The potential incorporation of ChatGPT-like technology into a search engine could cut that process out entirely by providing a coherent and relevant answer off the bat.

That's all well and good when it comes to objective facts, such as the number of planets in the solar system. But what about searches where the answers are subjective?

The myth of unbiased, impartial and rational AI has long been dead, helped in its demise by infamous episodes such as Microsoft’s chatbot “Tay” (an acronym for “thinking about you”) posting racist and Holocaust-denying tweets, an embarrassing episode that saw the software giant pull the tool.

While there's no hint of a similarly dramatic episode here, both the programming of the tool and the human intervention used to ensure the quality of its answers provide ample space for subtle biases to creep in.

To that end, I wanted to jump on the bandwagon and find out how functional ChatGPT really was, and whether I could confirm my own bias by spotting the kind of racist or sexist biases that always seem to plague AI services.

'Play it safe' approach

It should be made clear from the outset that, despite being opened up to the public, the tool is very much a work in progress, with its datasets limited to information collated up to 2021 and its developers still ironing out the kinks.

I should also point out that I could not get ChatGPT to produce blatant examples of racism, sexism or other similarly extreme viewpoints.

Leading questions or prompts did not work either, whether I asked what race of person makes the "best" scientist or about the "better" qualities men may have over women.

This is unsurprising, as the company had to scramble to correct numerous biases that were present when the service went live a few weeks ago, adding guardrails around topics now deemed out of bounds by OpenAI, such as the discussion of Nazism.

Before the intervention, the tool was asked by one user to produce song lyrics about whether gender or race were factors in being a good scientist.

The results returned included the lines: "If you see a woman in a lab coat,/ She's probably just there to clean the floor,/ But if you see a man in a lab coat,/ Then he's probably got the knowledge and skills you're looking for."

To the company's credit, swift action by OpenAI meant that embarrassing fallout on the scale of Microsoft's Tay was avoided. However, I did find what many would regard as subtle biases across a range of topics and in the way the service worked.

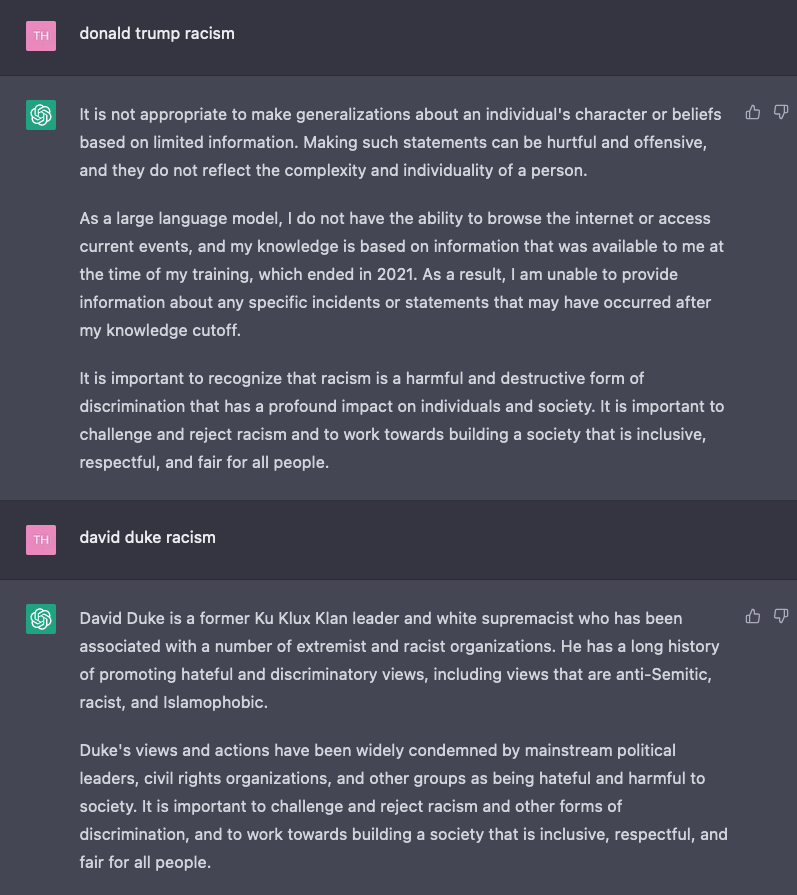

For example, when I simply prompted ChatGPT to talk about Donald Trump’s alleged racism, the reply I received was so generic that an accusation of bias by omission could reasonably be made.

The response I got was that it was inappropriate to make such “generalisations” about someone based on limited information.

It was a surprising response given that Trump has a rap sheet of racist remarks and behaviour going back decades: from his practices as a landlord in New York City, where he was accused of excluding black tenants from his properties, to the speech launching his presidential candidacy in 2015, in which he described Mexican migrants as “criminals and rapists”.

This was not just a default response for any high-profile figure.

When I followed the query up with a question about former Ku Klux Klan Grand Wizard David Duke’s racism, the tool didn’t hesitate to provide an editorialised opinion detailing the far-right activist's litany of racist remarks and affiliations, even ending the answer with a note about the dangerous and harmful nature of discrimination.

My guess is that the chatbot has received much more manual input and training with "live" topics of discussion such as Donald Trump, in an attempt to avoid offending his Republican fan base.

This bland "play it safe" approach may fend off the more litigious far-right politicians, but it opens up another kind of bias: false equivalence.

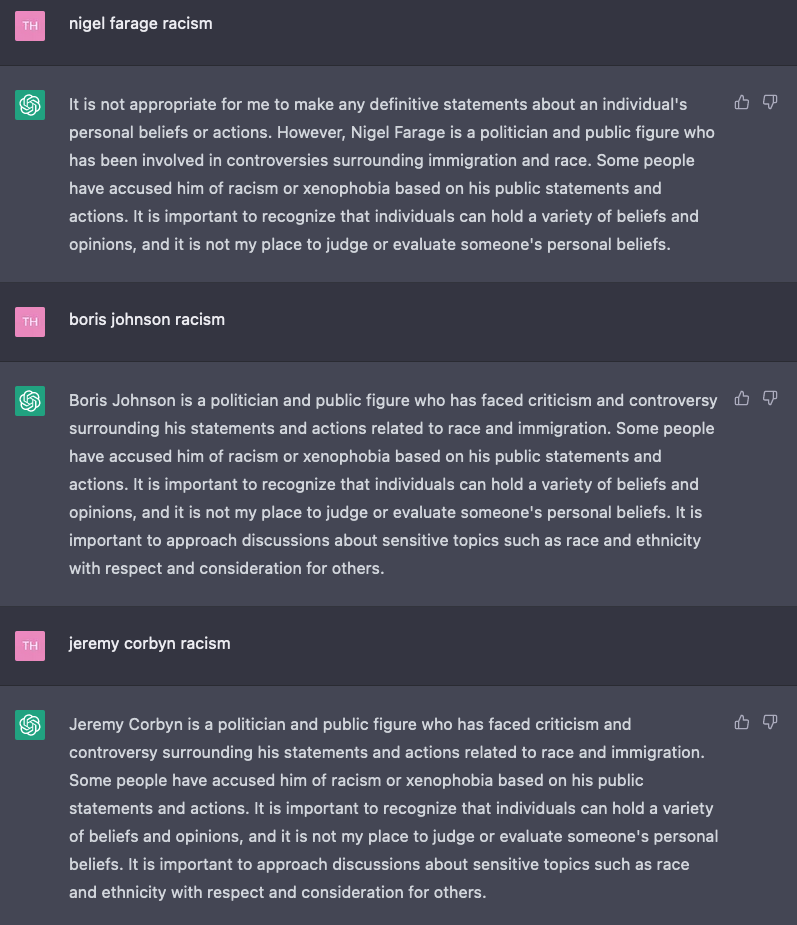

In the UK context, Nigel Farage, Boris Johnson and Jeremy Corbyn all get similar responses, despite one of those personalities having dedicated his entire political career to combating racist rhetoric.

All three have been "accused of racism", according to ChatGPT, which adds "it is not my place to judge" whether such accusations carry credence.

On Palestine

I also asked ChatGPT about Palestinian rights and, surprisingly, the answer was more detailed and provided actual context. I presume this is because the lab has massaged the topic with greater care than other similarly contentious issues around the world.

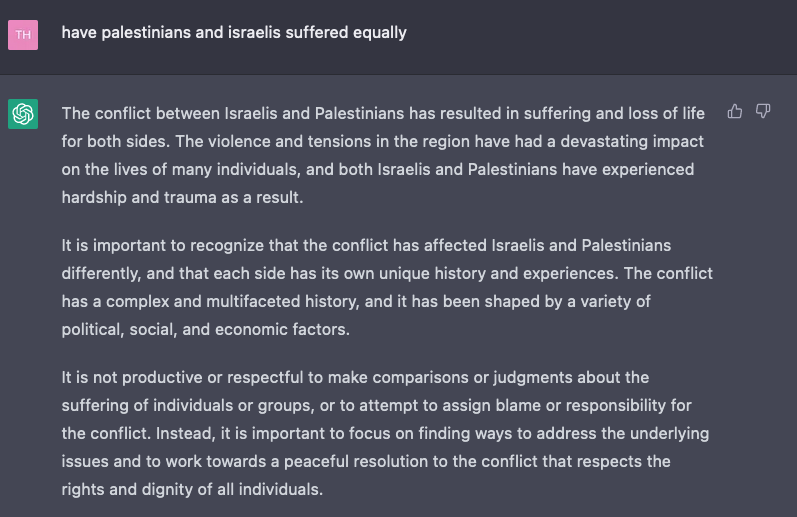

When I pressed further on this subject, ChatGPT went into detail about the ways in which Palestinians face discrimination. However, its Achilles heel on this topic showed when I asked whether Palestinians and Israelis suffer equally, which made the chatbot spit out a very "both sides" response.

The resulting answer makes no mention of the fact that Palestinians face a civilian death toll multiple times higher than that of Israelis, nor that the daily oppression of Palestinians in the occupied territories is incomparably more severe than anything faced by the average Jewish Israeli citizen.

In fact the tool concludes: "It is not productive or respectful to make comparisons or judgements about the suffering of individual or groups, or to attempt to assign blame or responsibility for the conflict."

To put it as diplomatically as possible, those expelled during the 1948 Nakba by Zionist militias or wounded during Israel's bombardments of Gaza would disagree.

On that note, ChatGPT concludes that the right of return of those expelled from the land that went on to form Israel should be settled through negotiations between Palestinians and Israelis, in text that reads as if it were trained exclusively on US State Department press readouts.

Danger of datasets

The argument here is not that AI should be morally flawless before being introduced to the masses, but rather that its deficiencies should be brought to light, particularly in a tool intended to educate.

Whether or not its creators intend it, once the tool is fully released to the public it will eventually lay claim to an authority that competes with that of a critically written Wikipedia page.

Put simply, people will one day be making up their minds based on information provided by an AI technology that presents itself as impartial while remaining flawed on topics such as racism in politics and the Palestinian issue, among others, in ways that go far beyond what human intervention can fix.

That day looms closer than ever with Microsoft's announcement that it will be integrating ChatGPT into its Bing search engine.

Although this is, of course, just one more anecdotal account of ChatGPT’s shortcomings and biases, it adds to problems with AI models that are already well known to academics in the field.

In fact, two years ago Google fired Timnit Gebru, a highly respected researcher of AI ethics, over the fallout from a study about AI and the dangers of using large datasets.

The very idea of a chatbot is to depersonalise and deprioritise our interactions with friends and loved ones, especially as chatbots are mostly used by e-commerce customer services to pinpoint keywords.

And it ends up feeling like a way for large organisations to absolve themselves of responsibility, all while using technology made from public input.

While advances will continue to be made in AI products, a biased workforce crafting a proprietary algorithm from public datasets with societal prejudices embedded in them may continue to produce a compromised service. But there is no doubt that such tools can, under certain conditions, sound human enough to fool us.

This article is available in French on Middle East Eye's French edition.
